Healthcare data is growing faster than data in any other industry worldwide. This data, which plays an instrumental role in patient diagnosis, comes from diverse medical sources that vary in structure, including magnetic resonance imaging (MRI), computed tomography (CT), positron emission tomography (PET), genomics, proteomics, wearable sensor streams, and electronic health records (EHRs). Because these datasets differ from one another and span many dimensions, they can be hard to interpret in clinical settings, especially when details from different formats must be combined.

Clinical settings stand to benefit from smart technology solutions. Traditional diagnosis relies heavily on human review, which has limitations: clinicians get tired and can be subject to bias. These limitations become more pronounced when clinical staff must search for subtle structural or textural details that signal early disease while juggling heavy caseloads.

In radiology, artificial intelligence (AI) and machine learning (ML) can analyze datasets across diverse sources and multiple dimensions, revealing patterns hidden in image texture that human review may miss. AI systems can detect tumors, segment lesions automatically, and track disease over time. Natural language processing (NLP) extends this ability by extracting clinical details from unstructured text, which improves records and could also enhance patient care. AI’s involvement does not mean replacing radiologists; it means implementing an intelligent system that augments human knowledge, interpretation, and decision-making.

Challenges in High-Sensitivity Medical Image Analysis

AI for medical image analysis has made rapid progress. However, as with any developing technology, it faces technical and clinical hurdles.

Low Signal-to-Noise Ratio (SNR). Early signs of disease often appear as indistinct, low-contrast regions in medical images. Careful design of the analog front end and low-noise amplifiers can improve baseline fidelity, but noise from patient movement, variations in imaging procedure, and external interference persists. AI approaches therefore focus on denoising methods that preserve diagnostic signal. Context-aware convolutional filters and spatially adaptive (nonuniform) denoising models can suppress irrelevant noise while retaining subtle signs of disease.
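To make spatially adaptive denoising concrete, here is a minimal sketch of a classic locally adaptive Wiener (Lee-type) filter, swapped in as a simple stand-in for the learned, context-aware models described above. It assumes a 2D image and a known noise variance `noise_var`; the function names are illustrative, not from any specific product.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def local_stats(img: np.ndarray, size: int):
    """Mean and variance of each pixel's size x size neighborhood (edge-padded)."""
    assert size % 2 == 1, "window size must be odd so output matches input shape"
    padded = np.pad(img, size // 2, mode="edge")
    windows = sliding_window_view(padded, (size, size))
    return windows.mean(axis=(-2, -1)), windows.var(axis=(-2, -1))


def adaptive_wiener(img: np.ndarray, noise_var: float, size: int = 5) -> np.ndarray:
    """Locally adaptive (nonuniform) denoising of a 2D image."""
    img = img.astype(np.float64)
    mean, var = local_stats(img, size)
    # Gain ~0 where local variance is at the noise floor (flat tissue -> local mean);
    # gain ~1 where variance is high (edges, small lesions -> original pixel kept).
    gain = np.clip((var - noise_var) / np.maximum(var, 1e-12), 0.0, 1.0)
    return mean + gain * (img - mean)
```

The key design choice is the per-pixel gain: flat tissue is pulled toward its local mean, while high-variance neighborhoods such as lesion boundaries are left largely intact.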

Patient Data Variability. Human anatomy varies in shape, position, and size across populations, and this variation can obscure fine details. AI models therefore need large, varied training data that reflect differences across demographics, imaging protocols, and equipment types. Transfer learning and domain adaptation strategies can further reduce the false negatives and false positives that arise from such variation.

Small Structural Differences in Data. Small pixel-intensity differences, subtle boundary irregularities, or unusual tissue patterns are often hard for clinicians to see, yet they matter for detecting disease earlier and more accurately. AI-driven radiomics uses multiscale feature extraction, texture maps, and precise segmentation architectures, such as 3D CNNs and U-Nets, to find these structural differences.
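Texture-based radiomic features are often computed from a gray-level co-occurrence matrix (GLCM). The sketch below assumes a 2D slice quantized to a small number of gray levels and a single pixel offset, and shows how contrast, homogeneity, and energy summarize fine tissue patterns. Function names are hypothetical; dedicated radiomics toolkits compute many more features over multiple offsets and scales.

```python
import numpy as np


def quantize(img: np.ndarray, levels: int) -> np.ndarray:
    """Rescale intensities to integer gray levels 0..levels-1."""
    lo, hi = img.min(), img.max()
    if hi == lo:
        return np.zeros(img.shape, dtype=np.intp)
    q = np.floor((img - lo) / (hi - lo) * levels).astype(np.intp)
    return np.clip(q, 0, levels - 1)


def glcm(q: np.ndarray, levels: int, dx: int = 1, dy: int = 0) -> np.ndarray:
    """Symmetric, normalized GLCM for one non-negative pixel offset (dx, dy)."""
    h, w = q.shape
    ref = q[: h - dy, : w - dx]   # reference pixels
    nbr = q[dy:, dx:]             # neighbors at the given offset
    m = np.zeros((levels, levels), dtype=np.float64)
    np.add.at(m, (ref.ravel(), nbr.ravel()), 1.0)
    m = m + m.T                   # count each pair in both directions
    return m / m.sum()


def texture_features(p: np.ndarray) -> dict:
    i, j = np.indices(p.shape)
    return {
        "contrast": float(np.sum(p * (i - j) ** 2)),       # local intensity variation
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
        "energy": float(np.sum(p ** 2)),                    # uniformity of texture
    }
```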

Complex High-Dimensional Data. Imaging methods such as 3D MRI, PET-CT, and 4D CT create very large datasets for each study. When this data is processed all at once, processor throughput can become a bottleneck. Efficient model training needs ways to reduce data size while preserving its structure, such as autoencoders, principal component analysis (PCA), and graph neural networks.
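As a minimal illustration of dimensionality reduction, the sketch below applies PCA via NumPy's SVD to a matrix whose rows are samples (for example, flattened patches or radiomic feature vectors; that input layout is an assumption). It returns the reduced scores and the fraction of variance each kept component explains.

```python
import numpy as np


def pca_reduce(x: np.ndarray, k: int):
    """Project samples (rows of x) onto the top-k principal components."""
    mean = x.mean(axis=0)
    centered = x - mean
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:k]
    scores = centered @ components.T              # (n_samples, k)
    explained = (s[:k] ** 2) / np.sum(s ** 2)     # fraction of variance kept
    return scores, components, mean, explained


def pca_reconstruct(scores, components, mean):
    """Map reduced scores back to the original feature space."""
    return scores @ components + mean
```

Checking `explained.sum()` is a quick way to choose `k`: keep enough components to retain most of the variance while shrinking the data passed to downstream models.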

Multimodality Data Fusion. Combining anatomical (MRI), metabolic (PET), and molecular (genomics, lab results) data improves diagnostic accuracy. However, aligning datasets with different resolutions and timestamps is challenging. Approaches that address this include attention-based fusion networks, cross-modal transformers, and coregistration algorithms that align corresponding features without losing detail.
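The core of attention-based fusion can be sketched as scaled dot-product cross-attention: each token from one modality (say, an MRI patch embedding) attends over tokens from another (say, PET patches). This minimal sketch assumes the inputs are already coregistered and embedded as feature vectors; the projection matrices `wq`, `wk`, `wv` stand in for learned weights.

```python
import numpy as np


def softmax(z: np.ndarray, axis: int = -1) -> np.ndarray:
    z = z - z.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(query_feats, context_feats, wq, wk, wv):
    """Each query token attends over all context tokens and returns a fused vector."""
    q = query_feats @ wq            # (n_q, d)
    k = context_feats @ wk          # (n_c, d)
    v = context_feats @ wv          # (n_c, d_v)
    scores = q @ k.T / np.sqrt(q.shape[-1])
    weights = softmax(scores, axis=-1)   # each row sums to 1 over context tokens
    return weights @ v, weights
```

The returned `weights` also show which PET regions each MRI patch drew on, which is one route to the interpretability discussed later in this section.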

Data Labeling. Expert labeling is resource-intensive and can be influenced by individual judgment. Semisupervised learning, active learning, and generative augmentation using GANs or diffusion models can reduce this burden, adding variety to training data and reducing the variance that comes from relying solely on human labels.
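One common active-learning strategy is uncertainty sampling: send the cases the current model is least sure about to expert annotators first. The minimal sketch below ranks unlabeled cases by predictive entropy; it assumes class probabilities come from some existing model, and the function names are illustrative.

```python
import numpy as np


def entropy(probs: np.ndarray) -> np.ndarray:
    """Predictive entropy per case; probs has shape (n_cases, n_classes)."""
    p = np.clip(probs, 1e-12, 1.0)   # avoid log(0)
    return -np.sum(p * np.log(p), axis=1)


def select_for_labeling(probs: np.ndarray, budget: int) -> np.ndarray:
    """Indices of the `budget` most uncertain cases, most uncertain first."""
    order = np.argsort(-entropy(probs), kind="stable")
    return order[:budget]
```

For example, with predictions `[[0.98, 0.02], [0.5, 0.5], [0.9, 0.1], [0.6, 0.4]]` and a budget of 2, the coin-flip case (index 1) and the 60/40 case (index 3) go to the radiologist, while confident predictions are skipped.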

Dataset Bias. Trained models often do not transfer well across sites because of variations in acquisition settings, differences in equipment, and demographic biases. Federated learning frameworks learn from distributed data without centralizing private patient records, which helps models generalize across sites while maintaining data security.
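The aggregation step at the heart of many federated learning frameworks is Federated Averaging (FedAvg): each site trains locally and shares only its model weights, which the server averages in proportion to local dataset size. This is a minimal sketch assuming each site's model is a list of NumPy arrays in the same order; it omits local training, communication, and security layers.

```python
import numpy as np


def federated_average(client_weights, client_sizes):
    """Weighted average of per-site parameter tensors (FedAvg aggregation).

    client_weights: one list of arrays per site, same shapes and order.
    client_sizes: number of local training cases per site.
    """
    total = float(sum(client_sizes))
    averaged = []
    for t in range(len(client_weights[0])):
        acc = np.zeros_like(client_weights[0][t], dtype=np.float64)
        for weights, n in zip(client_weights, client_sizes):
            acc += (n / total) * weights[t]
        averaged.append(acc)
    return averaged
```

Weighting by case count keeps a small site from pulling the global model as hard as a large one, though in practice sites with rare populations may warrant a different weighting.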

Clinical Integration. Interoperability across diverse clinical systems is essential for secure data transfer. AI-driven systems should ideally produce consistent, explainable outputs, such as segmentation overlays and attention heatmaps, that radiologists can check against known clinical findings. Without interoperability and transparency, AI models risk rejection by clinicians, even when they are technically correct.
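Grad-CAM is one widely used technique for producing such heatmaps from a convolutional network. The minimal sketch below assumes the activations of one convolutional layer and the gradients of the target class score with respect to them have already been obtained from the deep learning framework; it only shows how they combine into a normalized heatmap.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heatmap for one conv layer.

    activations, gradients: arrays of shape (channels, H, W); gradients are
    d(class score) / d(activations).
    """
    weights = gradients.mean(axis=(1, 2))              # importance of each channel
    cam = np.tensordot(weights, activations, axes=1)   # weighted sum -> (H, W)
    cam = np.maximum(cam, 0.0)                         # keep only positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam             # scale to [0, 1]
```

The heatmap is at the layer's spatial resolution; it is typically upsampled and overlaid on the image so the radiologist can see which regions drove the prediction.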

AI-Assisted Radiology Workflow: From Manual to Intelligent

The shift from traditional to AI-assisted medical imaging is a major change in oncology imaging, moving from manual processes to data-driven intelligent systems. This change can supplement traditional methods and can be implemented with the help of high-performance computing.

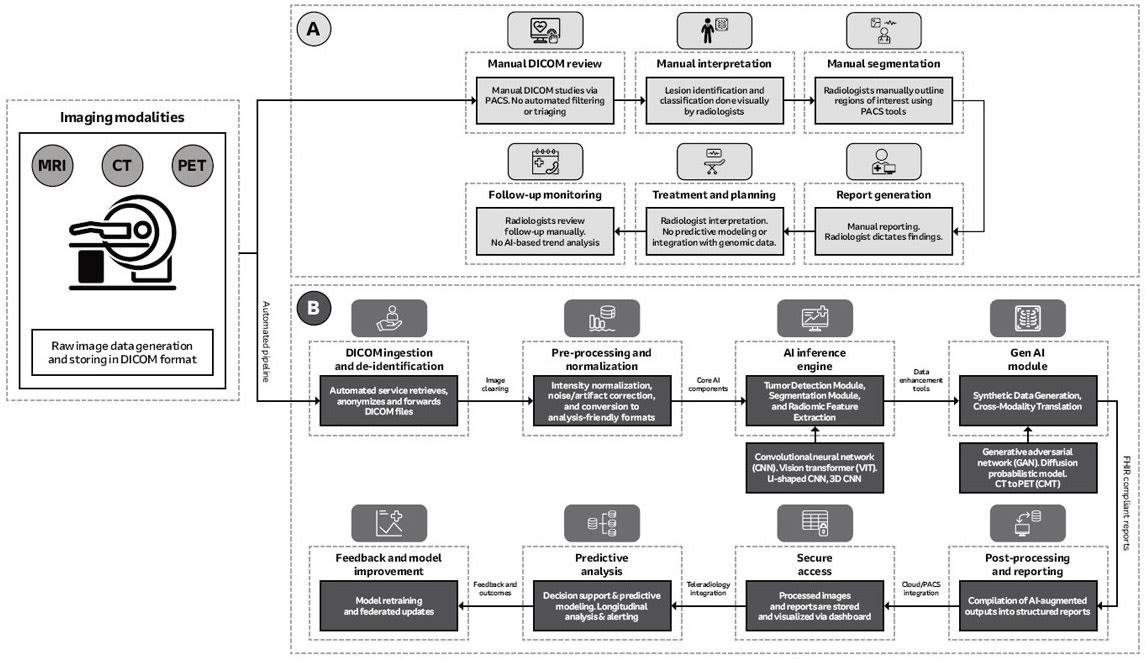

How Traditional Radiology Workflow Operates. The traditional oncology imaging pipeline is a manual, hardware-intensive process. Because it depends so heavily on manual steps, it is highly vulnerable to human error and inconsistency, as described below.

Image Acquisition. First, scanners (MRI, CT, PET) capture images in DICOM format. Acquisition relies on precision components, including low-noise instrumentation amplifiers (INAs) and high-resolution analog-to-digital converters (ADCs), built into modality-specific hardware (for example, MRI magnets and coils; CT gantries and multirow detector arrays).
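A useful back-of-envelope check on "high-resolution" ADCs is the textbook quantization-limited SNR of an ideal N-bit converter driven by a full-scale sine, roughly 6.02N + 1.76 dB, and its inverse, the effective number of bits (ENOB). This sketch is generic signal-chain arithmetic, not a specification of any particular scanner.

```python
import math


def ideal_adc_snr_db(bits: int) -> float:
    """Quantization-limited SNR of an ideal N-bit ADC driven by a full-scale sine.
    Signal RMS / quantization-noise RMS = 2**bits * sqrt(3/2), i.e. ~6.02*N + 1.76 dB."""
    return 20.0 * math.log10(2 ** bits * math.sqrt(1.5))


def effective_bits(sinad_db: float) -> float:
    """Effective number of bits (ENOB) implied by a measured SINAD in dB."""
    return (sinad_db - 1.76) / 6.02
```

For example, an ideal 16-bit converter tops out near 98 dB; any measured SINAD below that, from thermal noise, clock jitter, or amplifier noise, translates directly into fewer effective bits, which is why the low-noise front end matters as much as the converter's nominal resolution.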

Image Review and Interpretation. Radiologists then view images on high-resolution, DICOM-calibrated grayscale monitors connected to picture archiving and communication systems (PACS). Lesion identification, segmentation, and characterization are performed manually. This process depends heavily on clinician experience and is susceptible to fatigue. It is also poorly suited to detecting subtle patterns, performing predictive modeling, or integrating multimodal data such as genomics.
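Part of what a reading workstation does for CT is windowing: mapping a chosen range of Hounsfield units (HU) onto the display's gray levels. The minimal sketch below uses a simplified linear window; the center/width pair in the usage note is illustrative, and the DICOM VOI LUT definition adds small offsets omitted here.

```python
import numpy as np


def apply_window(hu: np.ndarray, center: float, width: float) -> np.ndarray:
    """Map CT Hounsfield units to 8-bit display gray levels with a linear window.
    Simplified: the DICOM VOI LUT formula includes 0.5/1 offsets not shown here."""
    lo = center - width / 2.0
    hi = center + width / 2.0
    scaled = (np.clip(hu, lo, hi) - lo) / (hi - lo)   # 0..1 within the window
    return np.round(scaled * 255.0).astype(np.uint8)
```

With a window centered at 40 HU and 400 HU wide, air (-1000 HU) renders black, dense bone renders white, and the soft-tissue range in between gets the full gray scale; this is why the same study is often reviewed under several window settings.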

Reporting and Archiving. The PACS and RIS (radiology information system) rely on servers and secure networks (VLANs, QoS) for storage and transmission. The hardware is sound, but traditional reports are often dictated and transcribed, which can produce unstructured or semistructured findings that vary widely. Traditional archiving is likewise limited by manual control, a lack of automation, and no real-time feedback.

Potential of AI-Assisted Radiology Workflow

AI in radiology is transforming oncology imaging from a manual process into a smart, data-driven, end-to-end diagnostic system. This change depends on a sequence of computationally intensive stages, each supported by both specialized hardware and advanced software.

Data Anonymization. The pipeline starts with automated ingestion and anonymization of DICOM data to comply with privacy rules while keeping data accessible across networks. Edge servers or virtualized environments handle these processes, often supported by hardware security modules and load balancing. Images then undergo artifact reduction, normalization, and denoising. These steps are computationally demanding, often running in parallel on multicore CPUs or GPUs, and they require large system memory to process three- and four-dimensional datasets without reducing throughput.
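The anonymization step can be sketched as removing identifying attributes and replacing the patient ID with a keyed pseudonym, so that studies from the same patient stay linked. This minimal sketch assumes the header is a plain dict keyed by DICOM attribute keywords (a real pipeline would read files with a DICOM library such as pydicom), and the keyword list is an illustrative subset, not a compliant de-identification profile.

```python
import hashlib
import hmac

# Illustrative subset only; a compliant profile (DICOM PS3.15 Annex E) covers far
# more attributes, including dates, UIDs, burned-in text, and private tags.
PHI_KEYWORDS = {
    "PatientName", "PatientBirthDate", "PatientAddress",
    "OtherPatientIDs", "ReferringPhysicianName", "InstitutionName",
}


def pseudonymize(patient_id: str, site_key: bytes) -> str:
    """Keyed hash: the same patient always maps to the same pseudonym,
    but the mapping cannot be reproduced without the site's secret key."""
    return hmac.new(site_key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def deidentify(header: dict, site_key: bytes) -> dict:
    """Return a de-identified copy of a header mapping DICOM keywords to values."""
    clean = {k: v for k, v in header.items() if k not in PHI_KEYWORDS}
    if "PatientID" in clean:
        clean["PatientID"] = pseudonymize(clean["PatientID"], site_key)
    clean["PatientIdentityRemoved"] = "YES"
    return clean
```

Using an HMAC rather than a plain hash matters because patient IDs are short and guessable; without the key, an attacker cannot simply hash candidate IDs to reverse the pseudonyms.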

Detection and Segmentation. Data then moves to deep learning modules for tumor detection, segmentation, and feature extraction. Convolutional neural networks, vision transformers, and 3D U-Nets can localize lesions with high spatial precision, including subtle differences that human readers may miss. This stage requires accelerator-rich nodes connected by high-bandwidth, low-latency networks, along with inference pipelines deployed in containers on orchestration platforms so they can scale.
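Segmentation precision is commonly quantified with the Dice similarity coefficient, which measures overlap between a predicted mask and a reference mask. This minimal sketch works on 2D or 3D binary masks; treating two empty masks as perfect agreement is a convention chosen here, not a universal rule.

```python
import numpy as np


def dice(pred: np.ndarray, truth: np.ndarray) -> float:
    """Dice similarity coefficient between two binary masks (2D or 3D)."""
    pred = pred.astype(bool)
    truth = truth.astype(bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0   # both masks empty: treated as perfect agreement
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)
```

A Dice score of 1.0 means perfect overlap; for small lesions, a shift of a single voxel can lower the score sharply, which is one reason small-structure segmentation is hard to score well.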

Deep Learning Hardware. Deep learning in medical imaging depends heavily on compute hardware. Multi-GPU servers with PCIe 4.0 or NVLink interconnects enable high-end processing. NVMe storage cuts latency, while liquid cooling can stabilize thermals.

AI Models. Models such as GANs, CNNs, ViTs, and 3D U-Nets need efficient hardware and optimized runtimes. Mixed-precision computation on GPUs can boost speed, and Docker and Kubernetes can support scalable deployment. Together, these enable efficient, reliable, and portable imaging performance across diverse clinical architectures.

Generative Modeling. Next, radiomics analysis extracts biomarkers that describe a tumor's shape and how it grows. Generative models add to this process by creating synthetic data for rare cancer types and by translating between imaging modalities. These models require large-scale, distributed training.
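Two simple examples of shape and growth biomarkers are sphericity (how close a lesion is to a perfect sphere) and volume doubling time between two scans. This sketch assumes lesion volume and surface area have already been measured from a segmentation, and the doubling-time formula assumes exponential growth between the two time points.

```python
import math


def sphericity(volume: float, surface_area: float) -> float:
    """Shape biomarker: 1.0 for a perfect sphere, lower for irregular lesions."""
    return (math.pi ** (1 / 3)) * (6 * volume) ** (2 / 3) / surface_area


def volume_doubling_time(v1: float, v2: float, days_between: float) -> float:
    """Growth biomarker from two scans, assuming exponential growth (v2 > v1)."""
    return days_between * math.log(2) / math.log(v2 / v1)
```

For instance, a lesion that quadruples in volume over 90 days has a doubling time of 45 days, while a unit cube scores a sphericity of about 0.81, reflecting its corners and flat faces.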

Edge-to-Cloud Integration. Edge-to-cloud integration allows models to be trained collaboratively across clinical sites while protecting patient data. Security protocols and encrypted communication ensure that only model updates, not patient data, are transmitted to servers, helping AI systems improve while complying with ethical and regulatory requirements.

Clinical Workflow Integration. In the final stage, AI output enters clinical workflows through structured reporting displays. Interactive dashboards show three-dimensional views of segmented lesions, while standard templates and data exchange protocols connect results to electronic health records. Predictive analytics and decision-support modules add clinical value by offering real-time suggestions alongside the radiologist’s reading.

Implementation Considerations

While the architecture of AI in medical imaging is well defined, implementing it requires advanced compute, storage, and networking systems. Balancing computational speed, scalability, privacy, and clinician usability is essential.

Automated DICOM Ingestion. Data pipelines must connect to hospital PACS through edge servers or ingestion nodes equipped with hardware security modules to protect patient health information. Automated data flow reduces manual work, lowers latency, and helps meet compliance requirements.
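A cheap first-pass check at an ingestion node is to confirm that incoming files have the DICOM Part 10 layout, a 128-byte preamble followed by the four bytes `DICM`, before handing them to a full parser. This sketch is a hypothetical triage step, not a full validation; real pipelines typically also receive data over DICOM network services and parse every header.

```python
import io


def is_dicom_part10(stream) -> bool:
    """True if the stream starts with a 128-byte preamble followed by b'DICM'."""
    header = stream.read(132)
    return len(header) == 132 and header[128:132] == b"DICM"


def triage(payloads: dict) -> tuple:
    """Split incoming files (name -> raw bytes) into accepted and quarantined lists."""
    accepted, quarantined = [], []
    for name, data in payloads.items():
        target = accepted if is_dicom_part10(io.BytesIO(data)) else quarantined
        target.append(name)
    return accepted, quarantined
```

Quarantining rather than discarding non-conforming files keeps the pipeline automated while leaving an audit trail for files that need manual review.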

Image Preprocessing and Normalization. Preprocessing benefits from multicore CPUs with Advanced Vector Extensions (AVX) for fast per-core vectorized processing. For highly parallel tasks (e.g., bias field correction, noise removal), GPUs can outperform CPUs. Handling 3D/4D volumes often requires more than 128 GB of RAM to avoid disk paging. Because acquisition protocols differ between sites, preprocessing pipelines also need robust normalization methods.
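One simple, widely used normalization for MRI-like intensities is a per-scan z-score computed over a foreground mask after clipping outliers. This minimal sketch assumes a NumPy volume and an optional boolean mask (for example, a body or brain mask from an earlier step); the percentile defaults are illustrative.

```python
import numpy as np


def zscore_normalize(volume, mask=None, clip_percentiles=(0.5, 99.5)):
    """Per-scan intensity normalization: clip outliers, then scale the
    foreground to zero mean and unit standard deviation."""
    vol = volume.astype(np.float32)
    fg = vol[mask] if mask is not None else vol.ravel()
    lo, hi = np.percentile(fg, clip_percentiles)
    vol = np.clip(vol, lo, hi)                           # suppress extreme outliers
    fg = vol[mask] if mask is not None else vol.ravel()
    std = fg.std()
    return (vol - fg.mean()) / (std if std > 0 else 1.0)
```

Because the result is invariant to a linear rescaling of intensities, scans from sites whose scanners apply different gains and offsets land on a comparable scale.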

Deep Learning Inference Engines. Running CNNs and vision transformers alongside volumetric segmentation networks requires multi-GPU servers with high-bandwidth interconnects (PCIe 5.0, NVLink). Memory-intensive models (e.g., transformers) need professional GPUs with 40–128 GB of VRAM each. Cooling and reliability also pose challenges; liquid cooling and redundant power supplies help keep data center systems stable. For large hospital networks, AI accelerators (e.g., TPUs) can further improve inference efficiency.
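A rough way to see why VRAM requirements climb so quickly is to count bytes per parameter. This back-of-envelope sketch is a simplification: it covers weights, gradients, and Adam-style optimizer state only, ignores activations (which for 3D volumes often dominate), and ignores the FP32 master copies that mixed-precision training usually keeps.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}


def inference_weights_gb(n_params: float, dtype: str = "fp16") -> float:
    """Memory for the model weights alone at inference time, in GB (1e9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


def training_state_gb(n_params: float, dtype: str = "fp32", optimizer_slots: int = 2) -> float:
    """Weights + gradients + optimizer state (Adam keeps two slots per parameter).
    Activations are excluded; for large 3D inputs they can exceed this figure."""
    return n_params * BYTES_PER_PARAM[dtype] * (2 + optimizer_slots) / 1e9
```

For example, a 1-billion-parameter model needs about 16 GB just for FP32 training state before any activations, while the same weights stored in FP16 for inference take about 2 GB.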

Synthetic Data and Cross-Modality Translation. Training generative models is computationally expensive and requires distributed training across GPU clusters linked by low-latency networking. Implementations must weigh computational cost against patient benefit (e.g., prioritizing task-specific synthetic augmentation, such as for uncommon tumors, over general dataset expansion).
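The prioritization described above can be expressed as a simple budgeting rule: generate synthetic cases only for under-represented classes, and cap them relative to the real cases available. This is a hypothetical policy sketch; the threshold, the cap `max_ratio`, and the class names in the usage note are illustrative assumptions.

```python
def synthetic_budget(class_counts: dict, target: int, max_ratio: float = 1.0) -> dict:
    """Synthetic samples to generate per class.

    Only classes below `target` real cases get any, and synthetic cases are capped
    at `max_ratio` times the real cases to limit over-reliance on generated data.
    """
    plan = {}
    for label, n_real in class_counts.items():
        deficit = max(0, target - n_real)
        plan[label] = min(deficit, int(max_ratio * n_real))
    return plan
```

With hypothetical counts of 900 glioma, 600 meningioma, and 40 rare-sarcoma cases and a target of 500, only the sarcoma class receives synthetic data, and the cap keeps it from being mostly generated.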

Edge-to-Cloud Integration. To keep patient data private, models should be trained on edge nodes that send only model updates to cloud servers. This requires security controls, encrypted links, and federated orchestration systems. Such infrastructure also reduces institutional data silos while improving model robustness across sites.
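Encrypted links protect updates in transit, but the server still sees each site's update. One well-known complement, swapped in here since the text does not name a specific method, is secure aggregation by pairwise masking: every pair of sites shares a random mask that one adds and the other subtracts, so individual updates look random while the masks cancel in the sum. This simulation draws masks from one shared generator for simplicity; real protocols derive them from pairwise key agreement and handle sites that drop out.

```python
import numpy as np


def masked_updates(updates, seed: int = 0):
    """Simulate pairwise masking of per-site model updates.

    For each pair (i, j), site i adds a shared random mask and site j subtracts it,
    so the server can recover only the sum of updates, not any single one.
    """
    rng = np.random.default_rng(seed)
    masked = [u.astype(np.float64).copy() for u in updates]
    n = len(updates)
    for i in range(n):
        for j in range(i + 1, n):
            mask = rng.normal(scale=100.0, size=updates[i].shape)
            masked[i] += mask
            masked[j] -= mask
    return masked
```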

Visualization and Clinical Reporting. Good implementation ultimately means easy-to-use interfaces with shareable results, such as heatmaps, along with clear diagnostic summaries. Interactive 3D visualization dashboards require high-resolution rendering on workstations with discrete GPUs for smooth interaction, and reports must follow HL7 FHIR standards to be compatible with EHR systems.
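As an illustration of FHIR-compatible output, the sketch below assembles a minimal FHIR R4 `DiagnosticReport` resource as JSON. The patient and study references and the LOINC code are placeholders supplied by the caller; a production report would carry many more elements (performer, effective time, results, and any attached images or heatmaps).

```python
import json


def build_diagnostic_report(patient_ref: str, study_ref: str, conclusion: str,
                            loinc_code: str, loinc_display: str) -> str:
    """Minimal FHIR R4 DiagnosticReport for an AI-assisted imaging read (illustrative)."""
    report = {
        "resourceType": "DiagnosticReport",
        "status": "preliminary",   # AI output awaiting radiologist sign-off
        "category": [{"coding": [{
            "system": "http://terminology.hl7.org/CodeSystem/v2-0074",
            "code": "RAD", "display": "Radiology"}]}],
        "code": {"coding": [{"system": "http://loinc.org",
                             "code": loinc_code, "display": loinc_display}]},
        "subject": {"reference": patient_ref},
        "imagingStudy": [{"reference": study_ref}],
        "conclusion": conclusion,
    }
    return json.dumps(report, indent=2)
```

Marking the AI-generated report `preliminary` leaves the radiologist as the one who moves it to `final`, consistent with AI augmenting rather than replacing the reader.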

Storage and Networking Infrastructure. Networks must move large volumes of data quickly, especially in federated learning systems. Large radiology imaging datasets need tiered storage that combines fast NVMe drives for active data with object storage for archived data. Hospitals can use hybrid setups that combine on-premises infrastructure with scalable long-term cloud storage.
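A tiered storage policy can be as simple as an age rule on last access. This hypothetical sketch keeps recently accessed studies on NVMe and plans moves for the rest to object storage (or back, when an archived study is recalled); the 90-day threshold and tier names are assumptions for illustration.

```python
from datetime import date


def assign_tier(last_accessed: date, today: date, hot_days: int = 90) -> str:
    """Recently used studies stay on NVMe; older ones go to object storage."""
    age_days = (today - last_accessed).days
    return "nvme" if age_days <= hot_days else "object_storage"


def plan_migrations(studies: dict, today: date, hot_days: int = 90) -> dict:
    """studies: study_id -> (current_tier, last_accessed). Returns needed moves."""
    moves = {}
    for study_id, (tier, last) in studies.items():
        target = assign_tier(last, today, hot_days)
        if target != tier:
            moves[study_id] = target
    return moves
```

A prior study pulled up for comparison with a new scan is a typical recall case: its access date resets, and the policy promotes it back to the fast tier.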

Conclusion

AI in medical imaging represents a paradigm shift from manual, perception-focused interpretation to technology-enabled, data-augmented diagnosis. A balanced approach that combines high-performance computation with federated learning and generative augmentation could offer tangible benefits. A multi-layered infrastructure with secure ingestion nodes, GPU-accelerated inference servers, distributed generative training platforms, edge-to-cloud frameworks, and intuitive clinician interfaces provides the essential building blocks.

The future of diagnostic imaging lies not in incremental improvements but in a complete redesign of the radiology workflow into a data-driven, predictive ecosystem. Combining AI algorithms with dedicated high-performance hardware provides the foundation for personalized, scalable, and more standardized medical diagnostics. As implementation challenges are gradually addressed, AI-augmented radiology will move from experimental deployment to a mainstream standard of care, reshaping predictive and preventive medicine.

This article was written by Purva Shah, a product marketing manager for the medical device practice of eInfochips, an Arrow Electronics company.